Building an Assessment Tool

After years of manual labor, Shift developed a successful assessment strategy to assist the Department of Defense. Could the company now streamline the process and deliver the scalable end product it had promised?

Evaluator Engine | 2022 - Present

During my first conversation with the CEO in January 2021, I wanted to see if he could describe the distant horizon he was trying to reach. After I transitioned from a consultant to a full-time role, I occasionally wondered whether the vision had changed. That sort of thing happens all the time in small, agile companies.

Except the vision didn’t go away, and in late 2022 it became our top priority.

The goal was deceptively simple: transform a people-heavy candidate assessment process into a scalable solution. It was the most duct-taped of the duct-tape startup projects I have seen. Worse, the whole thing had a cloak-and-dagger feel because the project was a deliverable attached to a government contract the company had secured (which is why I won’t be going into great detail or showing images of this project).

On the upside, the team manually weaving this together was on its 16th iteration. The program places current military personnel in de facto internships with partners who act as host companies. The process produced dependable results but, in its manual state, could not scale.

One problem continually vexed this project: extracting requirements was like cracking a safe. Through multiple rounds of work led by PMs Gabrielle Castaldo and Craig DeClerck, a robust user journey emerged and successfully translated the multi-faceted process into something actionable.

Of note

Due to the sensitive nature of this project, I’m primarily discussing our approach to scaling and refining the product rather than revealing specific details about how the product functions or how its output is used.

Here come the tiers

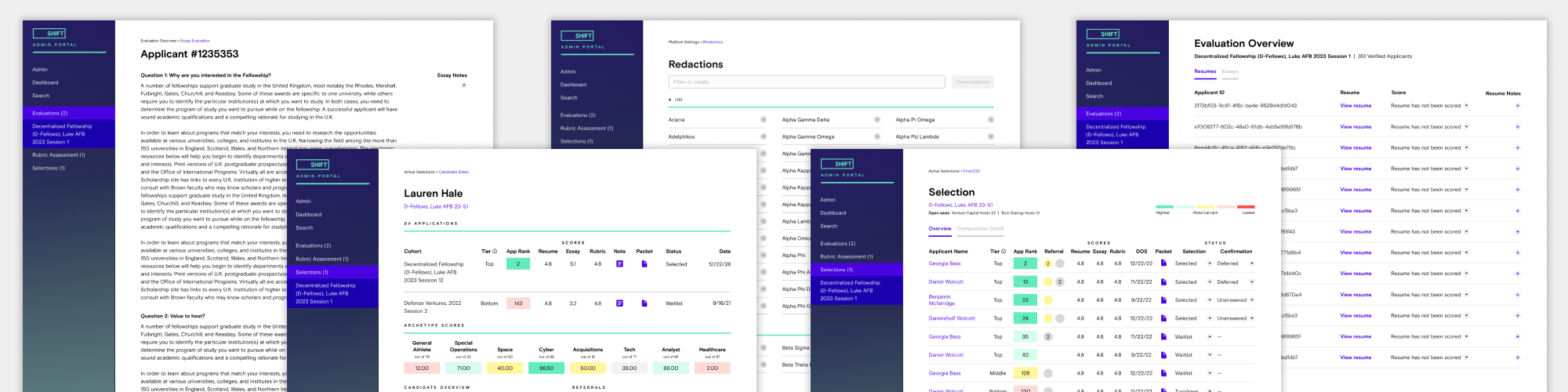

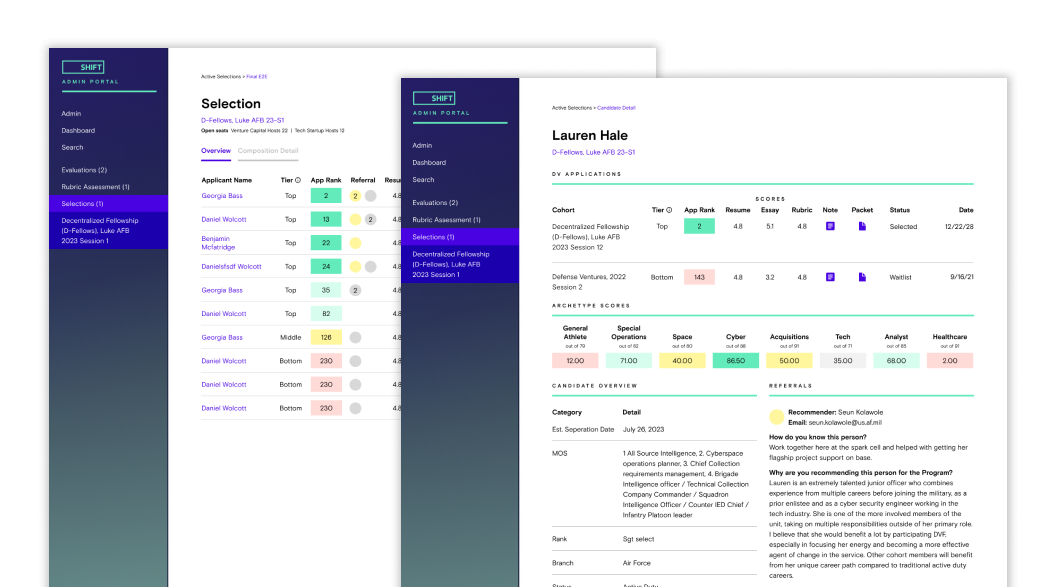

Unlike our other applications, whose user structures are mainly flat, Evaluator Engine strives to empower some (but not all) of our internal users to augment the platform without input from the engineering team.

For a small but mighty team, navigation on a multi-tiered site can require significant engineering effort to build and maintain the logic that decides what information each tier sees. Worse, early tests of the tool showed the initial dashboard load lagging. While we could have burned a sprint attempting a refactor, splitting the page into separate ‘Admin’ and ‘Dashboard’ pages was far more efficient.

Changes like this one directly affect team sanity and stability over time. While I have no management authority over our engineers, I respect the value they add to our organization. Additionally, the change is noticeable only to our highest-level admins and may even improve their overall experience on the platform. When a concession lets your team move faster without hurting platform usability, it’s the only call to make.

Now, even less manual effort

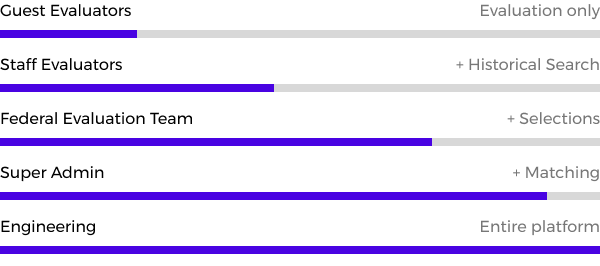

For the past two years, the company’s primary focus has been to eliminate as much of the manual process as possible. While we made great strides toward that goal with the initial launch of our internal tool, a significant piece of the story arc was missing.

The candidate submits their application for inclusion into this program via our Candidate App. A team of evaluators then assesses that submission and pairs the candidate with a suitable host company.

What determines a suitable candidate/partner match? That’s an entirely manual judgment, often trapped inside the heads of one or two staff members. Bottlenecks are not something you want to scale.

The simple solution was to build a mechanism to collect preferences from these host companies and then use them to automate the matches displayed in Evaluator Engine.

Unfortunately, Evaluator Engine is an internal tool, so where should we collect these preferences? Talent Tool, of course.

By reusing Talent Tool’s established preference-gathering pattern, we reduce the engineering effort needed to add this collection mechanism. That leaves the team more time to integrate the new data into candidate matching, further automating what was once a completely manual activity.

Now simplify it further

Discovering opposing viewpoints is far more challenging when building internal tools for an existing process. Once a process is established, people find it harder to imagine another way of accomplishing the task.

The main pain point this tool eliminated belonged to the company administrator who assigned assessments and tracked their status. Getting people into the process and following their progress was much easier in our new tool, but the assessment itself still required the same amount of labor. While our paid assessors could commit the hours needed to complete the process, the data from our volunteer assessors showed a significant dropoff. After further review, it was clear that our paid assessors were running up the bill and our volunteers were producing incomplete data because of churn.

Market conditions deteriorated as the new tool wrapped up its first successful assessment period. The product worked, and reviewing hundreds of applications and building a cohort was easier than ever, but layoffs would now shrink the team using the tool.

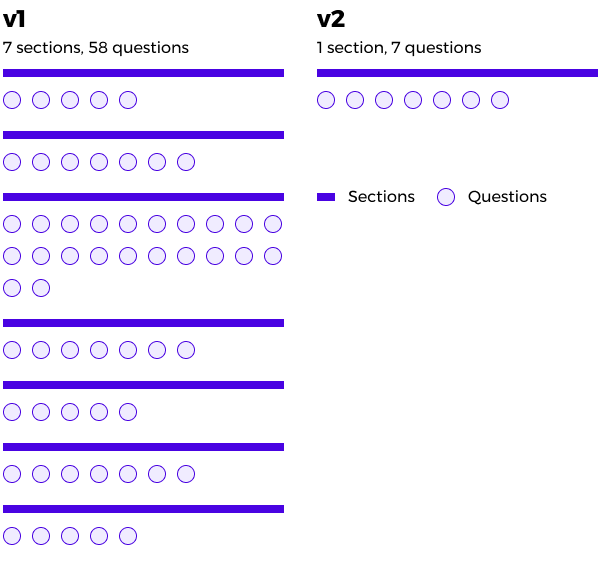

With fewer people, the process needed to become even leaner. The obvious target was a 58-question rubric used to grade resumes and essays. It took assessors the longest to complete, and every score entered rolled up into seven main categories. By keeping the categories but collapsing the underlying questions, we preserved the ability to compare candidate scores against historical data while cutting the rubric by 88% (from 58 questions to 7).
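As a rough illustration of the arithmetic and the roll-up idea, here is a minimal sketch. It assumes that question scores are averaged into their parent category (the actual roll-up method isn’t described), and the category names and question IDs are hypothetical placeholders, not the real rubric.

```python
from statistics import mean

ORIGINAL_QUESTIONS = 58
CONDENSED_QUESTIONS = 7


def reduction_pct(before: int, after: int) -> float:
    """Percentage of rubric items removed."""
    return (before - after) / before * 100


def roll_up(question_scores: dict[str, float],
            question_to_category: dict[str, str]) -> dict[str, float]:
    """Average detailed question scores into their parent categories.

    Assumption: averaging is used for the roll-up. Rolling legacy
    per-question scores up to category level lets old records be
    compared with scores entered on a condensed, one-question-per-category rubric.
    """
    buckets: dict[str, list[float]] = {}
    for question, score in question_scores.items():
        buckets.setdefault(question_to_category[question], []).append(score)
    return {category: mean(scores) for category, scores in buckets.items()}


print(round(reduction_pct(ORIGINAL_QUESTIONS, CONDENSED_QUESTIONS)))  # 88

# Hypothetical mapping of legacy questions to categories.
mapping = {"q1": "communication", "q2": "communication", "q3": "leadership"}
legacy_scores = {"q1": 4, "q2": 5, "q3": 3}
print(roll_up(legacy_scores, mapping))
```

Keeping the category as the unit of comparison is what makes the reduction safe for historical data: old and new records meet at the same seven buckets even though they were entered at different levels of detail.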

Up next: the robots

The main risk in making such a massive reduction to any tool is the loss of granularity. Because we expect to lose some insight from our assessors, Shift is now using artificial intelligence to provide another data point for consideration.

Trained on 20 cohorts of successful and unsuccessful candidates, an A.I. model will now act as a backup for the assessment work performed by its human counterparts. While human assessors use a seven-question rubric, the A.I. will apply the original 58-question rubric. Team admins will compare the scores produced by these two approaches and recommend adjustments as needed.
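How admins compare the two sets of scores isn’t specified. One plausible way to surface disagreements, sketched here under stated assumptions, is to put both results on the same seven categories and flag any category where they differ by more than a threshold. The category names, the 1 to 5 scale, and the 1.0-point threshold are all hypothetical.

```python
def flag_divergence(human: dict[str, float],
                    ai: dict[str, float],
                    threshold: float = 1.0) -> list[str]:
    """Return categories where human and A.I. scores differ by more than threshold.

    Assumption: both inputs are already expressed per category (the A.I.'s
    58-question scores rolled up to the same categories the humans score).
    Only categories present in both inputs are compared.
    """
    flagged = []
    for category in sorted(human.keys() & ai.keys()):
        if abs(human[category] - ai[category]) > threshold:
            flagged.append(category)
    return flagged


# Hypothetical per-category scores on an assumed 1-5 scale.
human_scores = {"communication": 4.0, "leadership": 2.0, "adaptability": 3.5}
ai_scores = {"communication": 4.5, "leadership": 3.5, "adaptability": 3.0}
print(flag_divergence(human_scores, ai_scores))  # ['leadership']
```

A flag like this only points admins at disagreements; deciding which score to trust, or whether the rubric needs adjusting, stays a human call.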

Tests are underway, and success here would give the company a scalable pathway to significant growth.